Amazon Nimble Studio is a new service that creative studios can use to produce visual effects, animations, and interactive content entirely in the cloud with AWS, from the storyboard sketch to the final deliverable. Nimble Studio provides customers with on-demand access to virtual workstations, elastic file storage, and render farm capacity. It also provides built-in automation tools for IP security, permissions, and collaboration.

Traditional creative studios often face the challenge of attracting and onboarding talent. Talent is not localized, and it’s important to speed up the hiring of remote artists and make them as productive as if they were in the same physical space. It’s also important to allow distributed teams to use the same workflow, software, licenses, and tools that they use today, while keeping all assets secure.

In addition, traditional studios often resort to buying more hardware for their local render farms, renting hardware at a premium, or extending their capacity with cloud resources. Those who decide to render in the cloud have to navigate these options and the broad range of AWS offerings, and the complexity they perceive can lead them to choose legacy approaches.

Today, we are happy to announce the launch of Amazon Nimble Studio, a service that addresses these concerns by providing ready-made IT infrastructure as a service, including workstations, storage, and rendering.

Nimble Studio is part of a broader portfolio of purpose-built AWS capabilities for media and entertainment use cases, including AWS services, AWS and Partner Solutions, and over 400 AWS Partners. You can learn more about our AWS for Media & Entertainment initiative, also launched today, which helps media and entertainment customers easily identify industry-specific AWS capabilities across five key business areas: Content production, Direct-to-consumer and over-the-top (OTT) streaming, Broadcast, Media supply chain and archive, and Data science and Analytics.

Benefits of using Nimble Studio

Nimble Studio is built on top of AWS infrastructure, which provides performant computing power and security for your data. It also enables elastic production, allowing studios to scale artist workstations, storage, and rendering capacity based on demand.

When using Nimble Studio, artists can use their favorite creative software tools on an Amazon Elastic Compute Cloud (Amazon EC2) virtual workstation. Nimble Studio uses EC2 for compute, Amazon FSx for storage, AWS Single Sign-On for user management, and AWS Thinkbox Deadline for render farm management.

Nimble Studio is open and compatible with most storage solutions that support the NFS/SMB protocol, such as Weka.io and Qumulo, so you can bring your own storage.

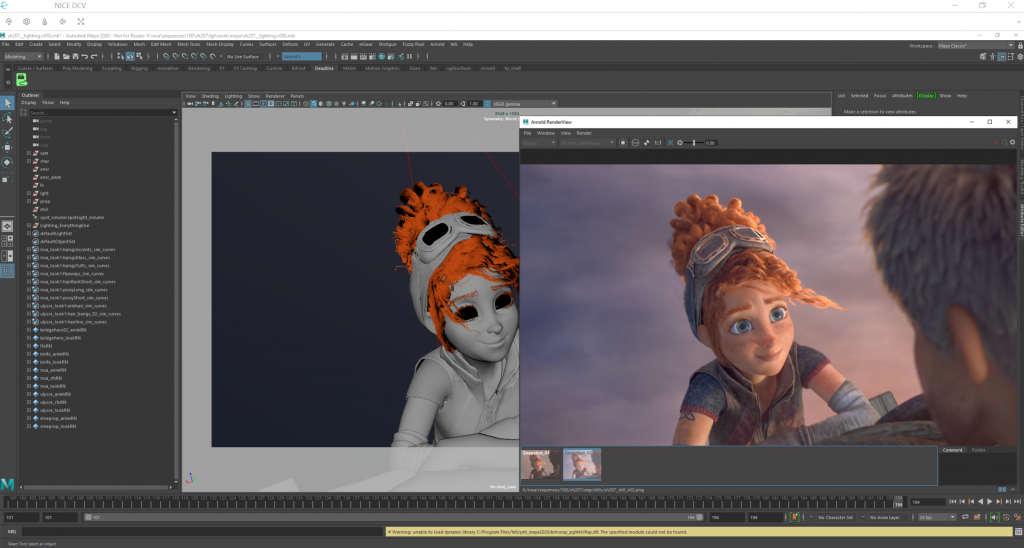

For high-performance remote display, the service uses the NICE DCV protocol and provisions G4dn instances, which are equipped with NVIDIA GPUs.

By providing the ability to share assets using globally accessible data storage, Nimble Studio makes it possible for studios to onboard global talent. Nimble Studio also centrally manages licenses for many software packages, reduces the friction of onboarding new artists, and enables predictable budgeting.

In addition, Nimble Studio comes with an artist-friendly console. Artists don’t need to have an AWS account or use the AWS Management Console to do their work.

But my favorite thing about Nimble Studio is that it’s intuitive to set up and use. In this post, I will show you how to create a cloud-based studio.

Set up a cloud studio using Nimble Studio

To get started with Nimble Studio, go to the AWS Management Console and create your cloud studio. You can decide if you want to bring your existing resources, such as file storage, render farm, software license service, Amazon Machine Image (AMI), or a directory in Active Directory. Studios that migrate existing projects can use AWS Snowball for fast and secure physical data migration.

Configure AWS SSO

For this configuration, choose the option where you don’t have any existing resources, so you can see how easy it is to get started. The first step is to configure AWS SSO in your account. You will use AWS SSO to assign artists role-based permissions to access the studio. To set up AWS SSO, follow these steps in the AWS Directory Service console.

Configure your studio with StudioBuilder

StudioBuilder is a tool that helps you deploy your studio simply by answering some configuration questions. StudioBuilder is available as an AMI, which you can get for free from AWS Marketplace.

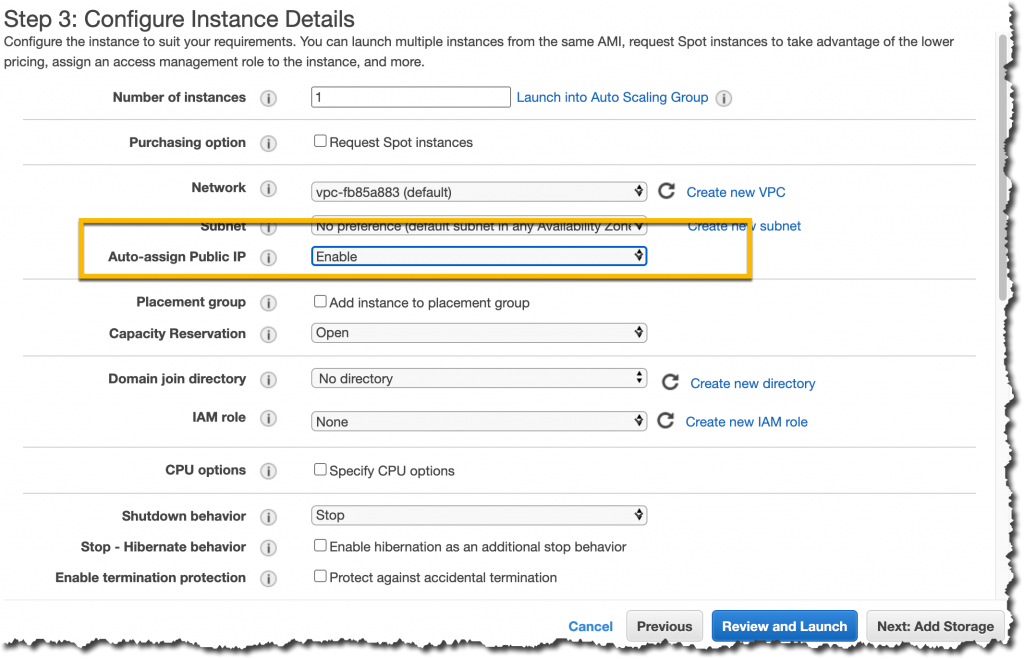

After you get the AMI from AWS Marketplace, you can find it in the Public images list in the EC2 console. Launch an EC2 instance from that AMI. I recommend a t3.medium instance. Set the Auto-assign Public IP field to Enable to ensure that your instance receives a public IP address. You will use this address later to connect to the instance.

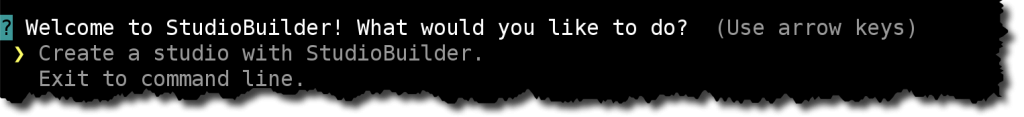
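
If you prefer to script this launch rather than click through the console, the settings above could be sketched as arguments to the EC2 `RunInstances` call. This is only an illustration: the function name, the placeholder AMI and subnet IDs, and the key pair are assumptions, not values from the StudioBuilder setup, and the block only builds the parameters without calling AWS.

```python
from typing import Any, Dict


def studio_builder_launch_params(ami_id: str, subnet_id: str, key_name: str) -> Dict[str, Any]:
    """Build keyword arguments for an EC2 RunInstances request.

    Hypothetical helper: it mirrors the console choices described above
    (a t3.medium instance with a public IP address) and nothing more.
    """
    return {
        "ImageId": ami_id,              # the StudioBuilder AMI from AWS Marketplace
        "InstanceType": "t3.medium",    # the size recommended in this post
        "MinCount": 1,
        "MaxCount": 1,
        "KeyName": key_name,            # assumption: you connect with an EC2 key pair
        "NetworkInterfaces": [
            {
                "DeviceIndex": 0,
                "SubnetId": subnet_id,
                # Equivalent of setting "Auto-assign Public IP" to Enable.
                "AssociatePublicIpAddress": True,
            }
        ],
    }


# Placeholder identifiers; replace them with values from your own account.
params = studio_builder_launch_params("ami-EXAMPLE", "subnet-EXAMPLE", "my-key-pair")

# With boto3 installed and credentials configured, these could be passed as:
#   boto3.client("ec2").run_instances(**params)
```

Keeping the parameters in a plain dictionary makes it easy to review them before anything is launched.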

As soon as you connect to the instance, you are directed to the StudioBuilder setup.

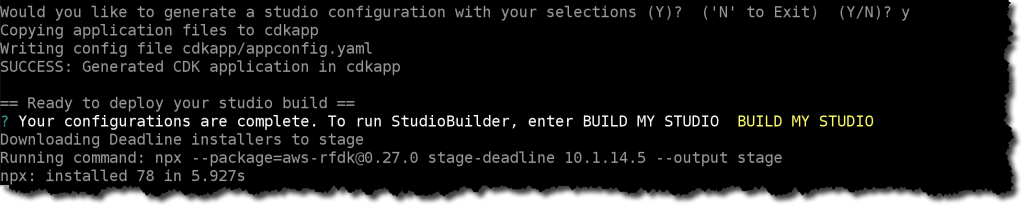

The setup guides you step by step through the configuration of networking, storage, Active Directory, and the render farm. Simply answer the questions to build the right studio for your needs.

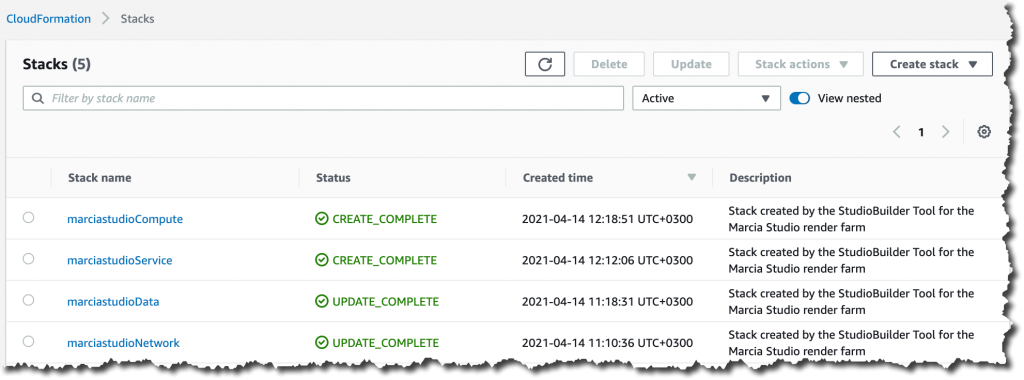

The setup takes around 90 minutes. You can monitor the progress using the AWS CloudFormation console. Your studio is ready when four new CloudFormation stacks have been created in your account: Network, Data, Service, and Compute.

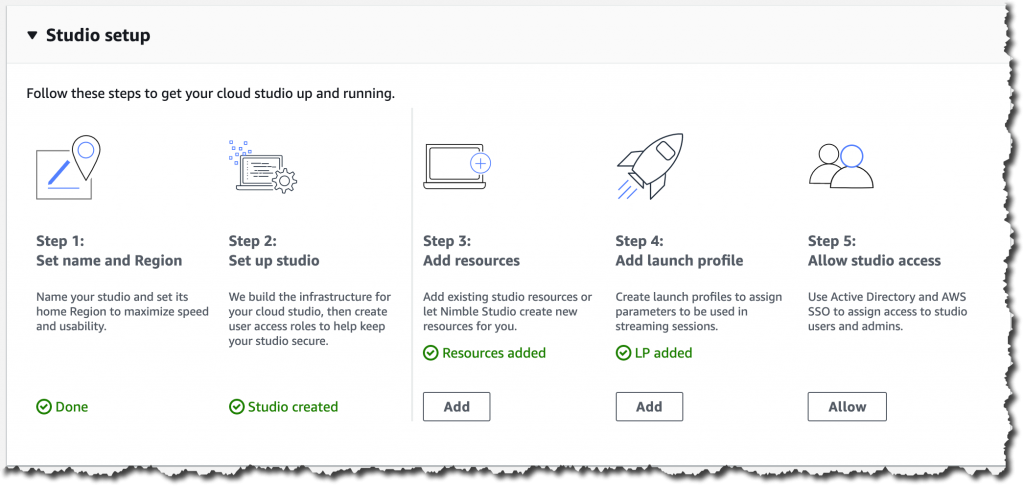
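
As a rough sketch of how you might automate that readiness check, the function below takes stack records shaped like the `Stacks` entries returned by the CloudFormation `DescribeStacks` API (`StackName` and `StackStatus`) and reports which of the four stacks are not complete yet. Matching stacks by name suffix and the sample stack names are assumptions made for illustration; check the actual stack names in your account.

```python
from typing import Dict, Iterable, List

# The four stacks this post says StudioBuilder creates.
REQUIRED_STACKS = ("Network", "Data", "Service", "Compute")


def missing_stacks(stacks: Iterable[Dict[str, str]]) -> List[str]:
    """Return the required stack kinds that are not yet CREATE_COMPLETE.

    Assumption: each stack name ends with one of the REQUIRED_STACKS words.
    """
    done = [s["StackName"] for s in stacks if s["StackStatus"] == "CREATE_COMPLETE"]
    return [
        kind
        for kind in REQUIRED_STACKS
        if not any(name.endswith(kind) for name in done)
    ]


# Hypothetical snapshot taken partway through the setup.
sample = [
    {"StackName": "MyStudioNetwork", "StackStatus": "CREATE_COMPLETE"},
    {"StackName": "MyStudioData", "StackStatus": "CREATE_COMPLETE"},
    {"StackName": "MyStudioService", "StackStatus": "CREATE_IN_PROGRESS"},
]
print(missing_stacks(sample))  # ['Service', 'Compute']
```

An empty list would mean all four stacks have been created and the studio is ready.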

Now terminate the StudioBuilder instance and return to the Nimble Studio console. In Studio setup, you can see that you’ve completed four of the five steps.

Assign access to studio admin and users

To complete the last step, Step 5: Allow studio access, you will use the Active Directory directory you created during the StudioBuilder step and the AWS SSO configuration you set up earlier to give administrators and artists access to the studio.

Follow the instructions in the Nimble Studio console to connect the directory to AWS SSO. Then you can add administrators to your studio. Administrators have control over what users can do in the studio.

At this point, you can add users to the studio, but because your directory doesn’t have users yet, move to the next step. You can return to this step later to add users to the studio.

Accessing the studio for the first time

To open the studio for the first time, find the Studio URL in the Studio Manager console. You will share this URL with your artists when the studio is ready. To sign in to the studio, you need the user name and password of the administrator you created earlier.

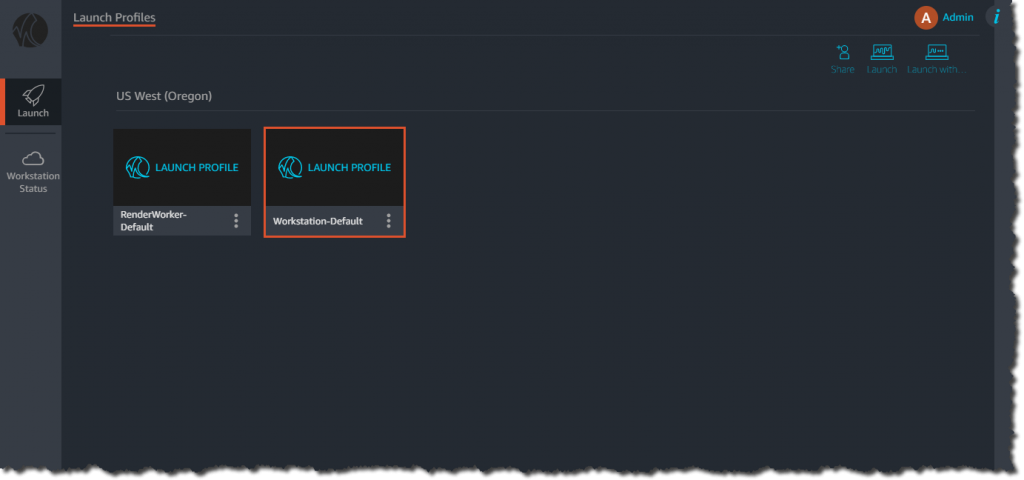

Launch profiles

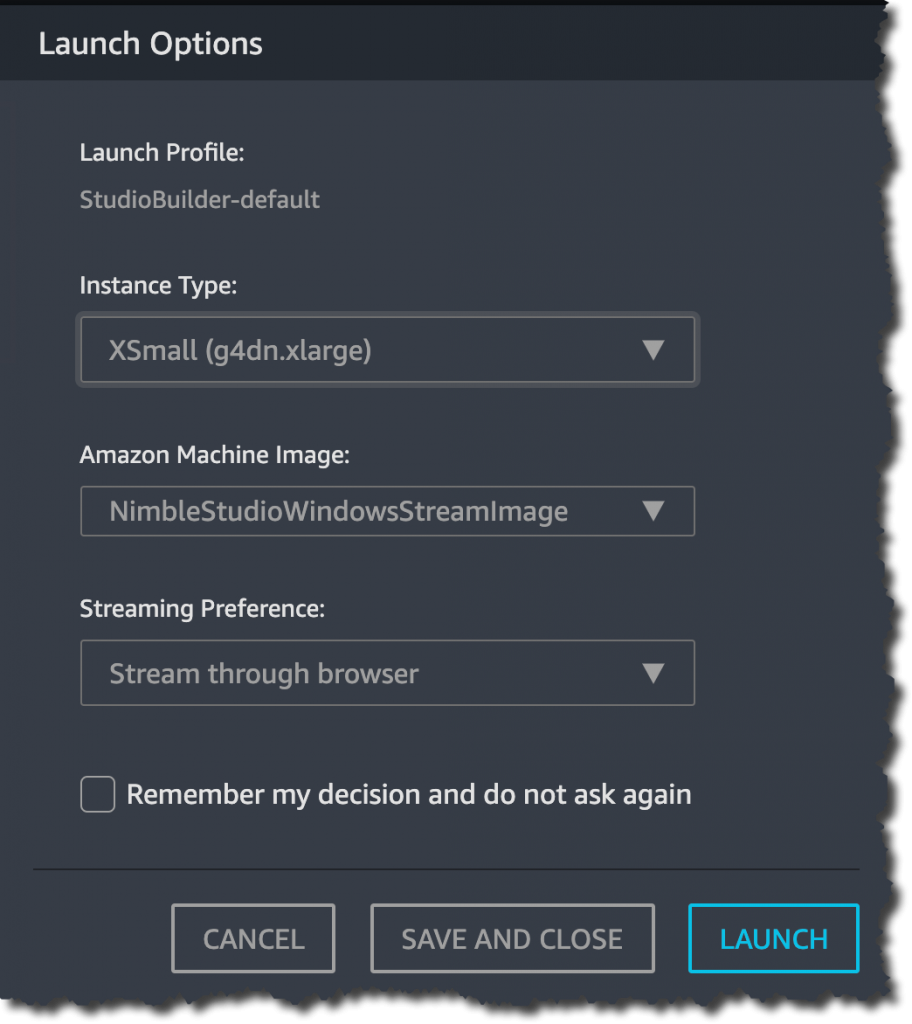

When the studio opens, you will see two launch profiles: one for a render farm instance and one for a workstation. They were created by StudioBuilder when you set up the studio. Launch profiles control access to resources in your studio, such as compute farms, shared file systems, instance types, and AMIs. You can use the Nimble Studio console to create as many launch profiles as you need to customize your team’s access to the studio resources.
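
To make the idea of a launch profile more concrete, here is a small conceptual model of one as a bundle of permitted resources plus the users who can use it. It is not the Nimble Studio API: the class, its fields, the user names, and the placeholder IDs are all hypothetical and only illustrate how a profile can gate which instance types and AMIs a user may launch.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class LaunchProfile:
    """Hypothetical model of a launch profile, for illustration only."""

    name: str
    instance_types: FrozenSet[str]
    ami_ids: FrozenSet[str]
    file_systems: FrozenSet[str] = frozenset()
    members: FrozenSet[str] = frozenset()

    def allows(self, user: str, instance_type: str, ami_id: str) -> bool:
        """True only if the user belongs to the profile and both the
        instance type and the AMI are among the profile's resources."""
        return (
            user in self.members
            and instance_type in self.instance_types
            and ami_id in self.ami_ids
        )


# Placeholder values; real profiles are created in the Nimble Studio console.
workstation = LaunchProfile(
    name="Workstation",
    instance_types=frozenset({"g4dn.xlarge"}),
    ami_ids=frozenset({"ami-EXAMPLE"}),
    members=frozenset({"alice"}),
)

print(workstation.allows("alice", "g4dn.xlarge", "ami-EXAMPLE"))  # True
print(workstation.allows("bob", "g4dn.xlarge", "ami-EXAMPLE"))    # False
```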

When you launch a profile, you launch a workstation that includes all the software you provided in the installed AMI. It takes a few minutes for the instance to launch for the first time. When it is ready, you will see the instance in the browser. You can sign in to it with the same user name and password you use to sign in to the studio.

Now your studio is ready! Before you share it with your artists, you might want to configure more launch profiles, add your artist users to the directory, and give those users permissions to access the studio and launch profiles.

Here’s a short video that describes how Nimble Studio works and how it can help you:

Available now

Nimble Studio is now available in the US West (Oregon), US East (N. Virginia), Canada (Central), Europe (London), and Asia Pacific (Sydney) Regions, and in the US West (Los Angeles) Local Zone.

Learn more about Amazon Nimble Studio and get started building your studio in the cloud.

— Marcia
