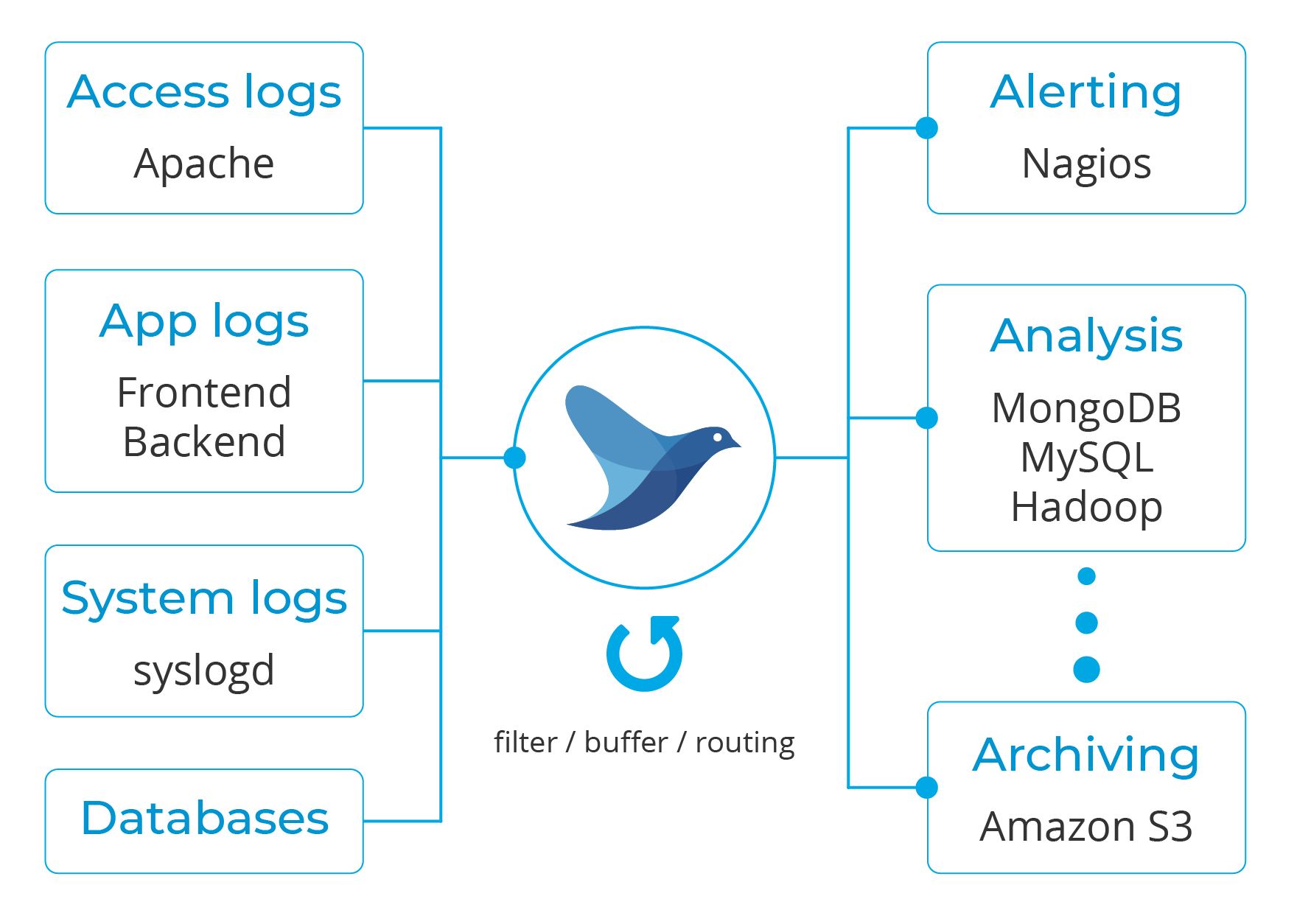

Fluentd is an open source data collector for semi-structured and unstructured data sets. It can analyze and send information to various tools for alerting, analysis, or archiving.

The main idea behind it is to unify data collection and consumption for better use and understanding. It is also worth noting that it is written in a combination of C and Ruby and requires very few system resources.

A vanilla instance runs on 30-40MB of memory. For even tighter memory requirements, check out Fluent Bit.

As part of this unified logging approach, it structures data as JSON wherever possible, which makes Wazuh alerts, already written as JSON, a good match for it.

The downstream data processing is much easier with JSON, since it has enough structure to be accessible while retaining flexible schemas.
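As a minimal sketch of what "enough structure with a flexible schema" means in practice, the following Python snippet reads fields from a trimmed-down alert modeled on the sample output shown later in this post. The helper name `get_path` is hypothetical and only serves the illustration; fields that are missing from a given alert simply fall back to a default.

```python
import json

# Trimmed-down alert, modeled on the sample output later in this post.
raw_alert = '{"rule": {"level": 3, "id": "607", "groups": ["ossec", "active_response"]}, "agent": {"id": "000"}}'


def get_path(document, path, default=None):
    """Walk a dotted path through nested dicts; return default if any key is missing."""
    current = document
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            return default
        current = current[key]
    return current


alert = json.loads(raw_alert)
rule_level = get_path(alert, "rule.level")
source_ip = get_path(alert, "data.srcip", default="-")  # absent in this alert
print(rule_level, source_ip)
```

Because every alert is a self-describing object, a consumer can pick the fields it cares about without knowing the full schema in advance.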

Moreover, it has a pluggable architecture that, as of today, has more than 500 community-contributed plugins to connect different data sources and data outputs.

Wazuh Fluentd forwarder

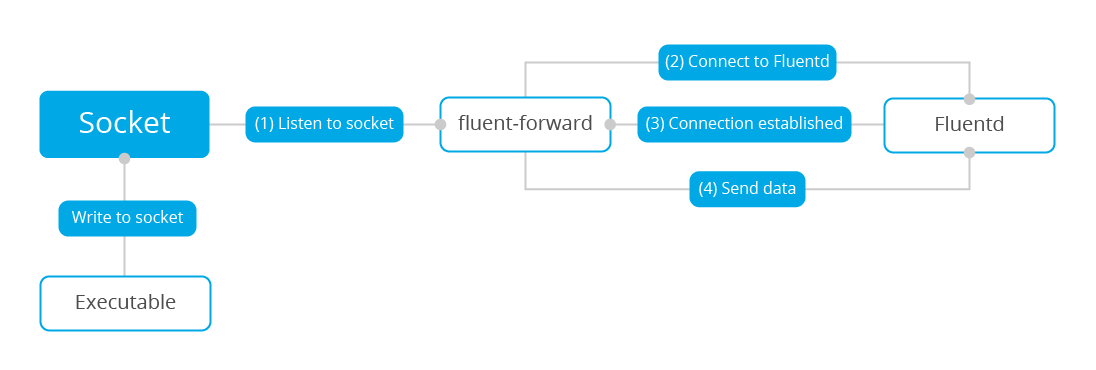

Wazuh v3.9 introduced the Fluentd module, which allows the forwarding of information to a Fluentd server. This is a diagram depicting the dataflow:

Configuration

The settings can be divided into input and output. These are the main ones you can use:

Input

- socket_path. Dedicated UDP socket to listen to incoming messages.
- tag. The tag to be added to the messages forwarded to the Fluentd server.
- object_key. It packs the log into an object, under the key defined by this setting.

Note: The socket is meant to be a Unix domain UDP socket.
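To illustrate what a Unix domain datagram socket looks like from a program's point of view, here is a small Python sketch with a listener and a sender. It is not Wazuh's implementation; the temporary socket path is a stand-in for the `socket_path` value, and the payload is a made-up alert fragment.

```python
import os
import socket
import tempfile

# Hypothetical path for illustration; a real setup would use the socket_path value.
socket_dir = tempfile.mkdtemp()
socket_path = os.path.join(socket_dir, "fluent.sock")

# Listener side: bind a datagram socket to a filesystem path.
listener = socket.socket(socket.AF_UNIX, socket.SOCK_DGRAM)
listener.bind(socket_path)

# Sender side: each sendto() call delivers one whole message.
sender = socket.socket(socket.AF_UNIX, socket.SOCK_DGRAM)
sender.sendto(b'{"rule": {"level": 3}}', socket_path)

received = listener.recv(65535)
print(received.decode())

# Clean up the sockets and the temporary files.
sender.close()
listener.close()
os.remove(socket_path)
os.rmdir(socket_dir)
```

Unlike a stream socket, there is no connection to set up: message boundaries are preserved, so one send corresponds to one received log line.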

Output

- address. Fluentd server location.
- port. Fluentd server port.
- shared_key. Key used for server authentication. It implicitly enables the TLS secure mode.

You can find all of the different settings in the documentation.

Sample configuration

This is a TLS enabled example:

    <fluent-forward>
      <enabled>yes</enabled>
      <socket_path>/var/run/fluent.sock</socket_path>
      <address>localhost</address>
      <port>24224</port>
      <shared_key>secret_string</shared_key>
      <ca_file>/root/certs/fluent.crt</ca_file>
      <user>foo</user>
      <password>bar</password>
    </fluent-forward>
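The following Python sketch reads the sample block above and reports where messages would be forwarded and whether TLS would be in effect. It only encodes the rule stated earlier (a `shared_key` implicitly enables TLS); it is an illustration, not how Wazuh parses its configuration.

```python
import xml.etree.ElementTree as ET

sample_config = """
<fluent-forward>
  <enabled>yes</enabled>
  <socket_path>/var/run/fluent.sock</socket_path>
  <address>localhost</address>
  <port>24224</port>
  <shared_key>secret_string</shared_key>
  <ca_file>/root/certs/fluent.crt</ca_file>
  <user>foo</user>
  <password>bar</password>
</fluent-forward>
"""

block = ET.fromstring(sample_config)
# Collect each child element as a setting name mapped to its text value.
settings = {child.tag: (child.text or "").strip() for child in block}

# Per the description above, setting shared_key implicitly enables TLS.
tls_enabled = bool(settings.get("shared_key"))
print(f"forwarding to {settings['address']}:{settings['port']}, TLS: {tls_enabled}")
```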

Hadoop use case

Hadoop is open source software designed for reliable, scalable, distributed computing, and it is widely used as the data lake for AI projects.

One of its key features is HDFS (Hadoop Distributed File System), which provides high-throughput access to application data, a requirement for big data workloads.

The following instructions show you how you can use Wazuh to send alerts, produced by the analysis engine in the alerts.json file, to Hadoop by taking advantage of the Fluentd module.

Wazuh manager

Add the following to the manager configuration file, located at /var/ossec/etc/ossec.conf, and then restart it:

    <socket>
      <name>fluent_socket</name>
      <location>/var/run/fluent.sock</location>
      <mode>udp</mode>
    </socket>

    <localfile>
      <log_format>json</log_format>
      <location>/var/ossec/logs/alerts/alerts.json</location>
      <target>fluent_socket</target>
    </localfile>

    <fluent-forward>
      <enabled>yes</enabled>
      <tag>hdfs.wazuh</tag>
      <socket_path>/var/run/fluent.sock</socket_path>
      <address>localhost</address>
      <port>24224</port>
    </fluent-forward>

As illustrated previously, Wazuh requires:

- A UDP socket. You can find more about these settings in the Wazuh socket documentation.
- The input. For this use case, you will use the log collector to read the Wazuh alerts with the target option, which forwards them to the previously defined socket instead of the Wazuh analysis engine.
- Fluentd forwarder module. It connects to the socket to fetch incoming messages and sends them to the specified address.
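Since the three pieces must reference each other correctly, a quick consistency check can catch typos before restarting the manager. The Python sketch below is an illustration under assumptions: it wraps the fragment in an `<ossec_config>` root so it parses as one document, and `check_wiring` is a hypothetical helper, not a Wazuh tool.

```python
import xml.etree.ElementTree as ET

fragment = """
<ossec_config>
  <socket>
    <name>fluent_socket</name>
    <location>/var/run/fluent.sock</location>
    <mode>udp</mode>
  </socket>
  <localfile>
    <log_format>json</log_format>
    <location>/var/ossec/logs/alerts/alerts.json</location>
    <target>fluent_socket</target>
  </localfile>
  <fluent-forward>
    <enabled>yes</enabled>
    <tag>hdfs.wazuh</tag>
    <socket_path>/var/run/fluent.sock</socket_path>
    <address>localhost</address>
    <port>24224</port>
  </fluent-forward>
</ossec_config>
"""


def check_wiring(xml_text):
    """Return a list of problems found in the socket/localfile/fluent-forward wiring."""
    root = ET.fromstring(xml_text)
    sockets = {s.findtext("name"): s.findtext("location") for s in root.findall("socket")}
    forwarder_paths = {f.findtext("socket_path") for f in root.findall("fluent-forward")}
    problems = []
    for localfile in root.findall("localfile"):
        target = localfile.findtext("target")
        if target is None:
            continue  # no target: this input is not redirected to a socket
        if target not in sockets:
            problems.append(f"target '{target}' has no matching <socket>")
        elif sockets[target] not in forwarder_paths:
            problems.append(f"socket '{target}' is not read by any <fluent-forward>")
    return problems


problems = check_wiring(fragment)
print(problems or "wiring looks consistent")
```

The check follows the chain described above: the `target` must name a defined socket, and that socket's location must be the `socket_path` the forwarder reads from.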

Fluentd

You need to install td-agent, which is part of the Fluentd offering. It is available as rpm/deb/dmg packages and includes pre-configured recommended settings.

To ingest the information into HDFS, Fluentd needs the webhdfs plugin. By default, this plugin creates several files on an hourly basis. For this use case, though, this behavior must be modified to convey Wazuh's alerts in real time.

Edit the td-agent’s configuration file, located at /etc/td-agent/td-agent.conf, and add the following, then restart the service:

    <match hdfs.wazuh>
      @type webhdfs
      host namenode.your.cluster.local
      port 9870
      append yes
      path "/Wazuh/%Y%m%d/alerts.json"
      <buffer>
        flush_mode immediate
      </buffer>
      <format>
        @type json
      </format>
    </match>

- match. Specify the tag name or pattern to match; here it is the tag defined in the Wazuh configuration (hdfs.wazuh).
- host. HDFS namenode hostname.
- flush_mode. Use immediate to write the alerts in real time.
- path. The path in HDFS.
- append. Set to yes to avoid overwriting the alerts file.

You can read more about these settings in the Fluentd configuration documentation.
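The `%Y%m%d` part of the path is a strftime-style date placeholder, which is what produces one directory per day. As a rough illustration, Python's `strftime` uses the same placeholder syntax; the helper `expand_path` is hypothetical, and which time zone Fluentd applies depends on its own configuration.

```python
from datetime import datetime, timezone

path_template = "/Wazuh/%Y%m%d/alerts.json"


def expand_path(template, when):
    """Expand strftime-style date placeholders in a path template."""
    return when.strftime(template)


# Example date chosen to match the sample output shown later in this post.
example_day = datetime(2020, 5, 5, 12, 30, 56, tzinfo=timezone.utc)
print(expand_path(path_template, example_day))  # /Wazuh/20200505/alerts.json
```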

Hadoop

The following section assumes that Hadoop is already installed. You can follow the official installation guide.

As mentioned before, HDFS is the storage system for Hadoop. It is a distributed file system that can conveniently run on commodity hardware and it is highly fault-tolerant.

Create a folder in your HDFS to store Wazuh alerts:

hadoop fs -mkdir /Wazuh

Enable append operations in HDFS by editing the /usr/local/hadoop/etc/hadoop/hdfs-site.xml file, and then restart the whole cluster:

    <property>
      <name>dfs.webhdfs.enabled</name>
      <value>true</value>
    </property>
    <property>
      <name>dfs.support.append</name>
      <value>true</value>
    </property>
    <property>
      <name>dfs.support.broken.append</name>
      <value>true</value>
    </property>
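With WebHDFS enabled, the namenode exposes HDFS over a REST API rooted at `/webhdfs/v1`, which is what the Fluentd plugin talks to. The Python sketch below only builds such URLs so you can test them with a browser or an HTTP client; `webhdfs_url` is a hypothetical helper, and the host and port are the placeholder values from the td-agent configuration above.

```python
from urllib.parse import quote, urlencode


def webhdfs_url(host, port, hdfs_path, operation, **params):
    """Build a WebHDFS REST URL of the form http://host:port/webhdfs/v1/<path>?op=..."""
    query = urlencode({"op": operation, **params})
    return f"http://{host}:{port}/webhdfs/v1{quote(hdfs_path)}?{query}"


# GETFILESTATUS is a read-only operation, so it is a safe connectivity check.
status_url = webhdfs_url("namenode.your.cluster.local", 9870, "/Wazuh", "GETFILESTATUS")
print(status_url)
```

A successful response to the status request for `/Wazuh` suggests that the namenode address, port, and folder are all reachable from the Fluentd host.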

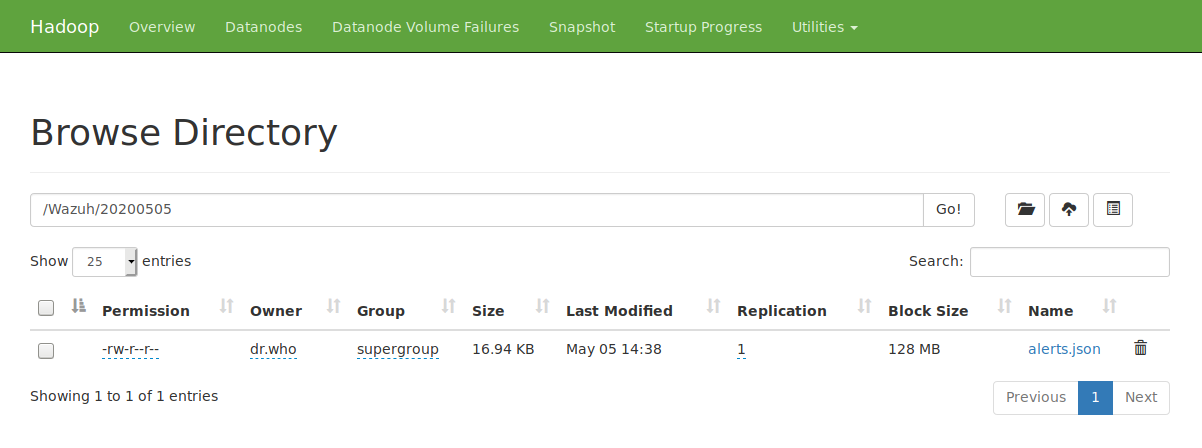

At this point, all the Wazuh alerts will be stored in real time in the defined path, /Wazuh/DATE/alerts.json:

    [hadoop@hadoop ~]$ hadoop fs -tail /Wazuh/20200505/alerts.json
    2020-05-05 12:31:27,273 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false
    12:30:56","hostname":"localhost"},"decoder":{"parent":"pam","name":"pam"},"data":{"dstuser":"root"},"location":"/var/log/secure"}"}
    {"message":"{"timestamp":"2020-05-05T12:30:56.803+0000","rule":{"level":3,"description":"Active response: wazuh-telegram.sh - add","id":"607","firedtimes":1,"mail":false,"groups":["ossec","active_response"],"pci_dss":["11.4"],"gdpr":["IV_35.7.d"],"nist_800_53":["SI.4"]},"agent":{"id":"000","name":"localhost.localdomain"},"manager":{"name":"localhost.localdomain"},"id":"1588681856.35276","cluster":{"name":"wazuh","node":"node01"},"full_log":"Tue May 5 12:30:56 UTC 2020 /var/ossec/active-response/bin/wazuh-telegram.sh add - - 1588681856.34552 5502 /var/log/secure - -","decoder":{"name":"ar_log"},"data":{"srcip":"-","id":"1588681856.34552","extra_data":"5502","script":"wazuh-telegram.sh","type":"add"},"location":"/var/ossec/logs/active-responses.log"}"}
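Judging from the sample output above, each stored line wraps the original alert as a string under the `message` key, so a consumer has to decode JSON twice. The Python sketch below assumes the inner alert is stored as a properly escaped JSON string (the output above seems to be shown without that escaping), and `decode_line` is a hypothetical helper.

```python
import json


def decode_line(line, object_key="message"):
    """Unwrap one stored line: the outer object first, then the alert held as a JSON string."""
    outer = json.loads(line)
    return json.loads(outer[object_key])


# Build a well-formed example line rather than copying the displayed output verbatim.
alert = {"rule": {"level": 3, "id": "607"}, "agent": {"id": "000"}}
stored_line = json.dumps({"message": json.dumps(alert)})

decoded = decode_line(stored_line)
print(decoded["rule"]["id"])
```

If you set a different `object_key` in the forwarder configuration, pass the same value as the `object_key` argument.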

You can also browse the alerts in your datanode.

Conclusion

The Fluentd forwarder module can be used to send Wazuh alerts to many different tools.

In this case, sending the information to Hadoop’s HDFS enables you to take advantage of big-data analytics and machine learning workflows.

References

- Fluentd.

- Fluent Bit.

- Fluentd data sources.

- Fluentd data outputs.

- Fluentd webhdfs plugin.

- Fluentd configuration.

- Td-agent installation guide.

- Wazuh Fluentd forwarder.

- Wazuh Fluentd forwarder documentation.

- Wazuh socket documentation.

- Wazuh log collection.

- Hadoop.

- Hadoop installation guide.

If you have any questions about this, join our Slack community channel! Our team and other contributors will help you.

The post Forward alerts with Fluentd appeared first on Wazuh.